A nice part of my job is that I occasionally get time to experiment with small prototypes for new trends in enterprise mobility. Prototypes don’t necessarily evolve into real products, and sometimes they serve only to discover the wrong way of doing something. But they are an important facet of software development and they’re fun to work on.

Recently I’ve been thinking about how to build a Cloud-based solution that can react to events happening in your workplace and then perform mobile workflows on your behalf, based on simple rules that you give to the system.

This idea is not new: Microsoft Flow and IFTTT are both well-known examples of this concept. I started to think about what the back end of such a system might look like. From an architecture perspective, what are the right patterns to follow? From a technology perspective, what are the best frameworks to use for its implementation?

Use Case

I picked a simple use case to implement. Imagine someone with a mobile device at work who wants to get a push notification telling her when anyone else views or updates project documents in the organization’s Content Repository (such as SharePoint).

Architecture Choices

A common Component Model should be used by all the elements of a mobile workflow. Whether it is adding a Contact to LinkedIn, reading an audit message from a database, or sending out a push notification, each step in a mobile workflow needs a way to be “plugged” together with the others in an orchestrated fashion, so that data can easily flow out of one step and into another. It should also be easy to build new components that use the same component model and can be added to existing workflows.
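
To make the idea concrete, here is a minimal sketch in plain Java (no Camel) of a shared component contract. The `WorkflowStep` interface, the `Message` class and the `Workflow` chain are hypothetical names for illustration only; in Camel, roughly analogous roles are played by its Component, Endpoint and Processor abstractions.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical message shape: a body plus headers (similar in spirit to a Camel Exchange).
final class Message {
    final Map<String, String> headers = new HashMap<>();
    String body;

    Message(String body) {
        this.body = body;
    }
}

// The shared contract: every workflow step takes a message and returns a message.
interface WorkflowStep {
    Message process(Message in);
}

// Plugs steps together so that the output of one step becomes the input of the next.
final class Workflow {
    private final List<WorkflowStep> steps = new ArrayList<>();

    // Appends a step and returns this workflow so calls can be chained.
    Workflow then(WorkflowStep step) {
        steps.add(step);
        return this;
    }

    // Runs every step in order and returns the final message.
    Message run(Message in) {
        Message current = in;
        for (WorkflowStep step : steps) {
            current = step.process(current);
        }
        return current;
    }
}
```

Under this sketch, adding a new component means implementing `WorkflowStep`; an existing workflow can then include it with one more `then(...)` call.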

Loosely coupled execution of workflow steps is important. All steps that execute on the back end should be “publish-subscribe” in nature, with no direct API calls between components in the workflow. Systems like that scale very well because you can add more “workers” that specialize in a low-level task and all subscribe to the same work queue.
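
A minimal in-process sketch of that competing-workers idea, assuming a `java.util.concurrent.BlockingQueue` as a stand-in for the shared work queue: the publisher only puts messages on the queue, and whichever worker is idle takes the next one. `WorkQueueDemo` and the poison-pill shutdown are illustrative choices, not part of the prototype. Kafka itself works slightly differently: it divides a topic's partitions, rather than individual messages, among the consumers in a consumer group.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.LinkedBlockingQueue;

final class WorkQueueDemo {
    // Sentinel message that tells a worker to stop (one is sent per worker).
    private static final String POISON = "__STOP__";

    // Publishes tasks onto one shared queue; workerCount workers compete to take them.
    // Returns "worker-N:task" entries recording which worker handled each task.
    static List<String> run(List<String> tasks, int workerCount) {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        ConcurrentLinkedQueue<String> done = new ConcurrentLinkedQueue<>();
        List<Thread> workers = new ArrayList<>();

        for (int i = 0; i < workerCount; i++) {
            String name = "worker-" + i;
            Thread worker = new Thread(() -> {
                try {
                    while (true) {
                        String task = queue.take(); // blocks until a message is available
                        if (task.equals(POISON)) {
                            return;
                        }
                        done.add(name + ":" + task);
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            workers.add(worker);
            worker.start();
        }

        try {
            // The publisher only knows the queue, never the individual workers.
            for (String task : tasks) {
                queue.put(task);
            }
            for (int i = 0; i < workerCount; i++) {
                queue.put(POISON);
            }
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while publishing", e);
        }
        return new ArrayList<>(done);
    }
}
```

Scaling out in this model is just a larger `workerCount`; nothing on the publishing side changes.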

Technology Choices

For a Component Model I used Apache Camel. It appealed to me because it implements the Enterprise Integration Patterns and provides the concept of Message Endpoints. Apache Camel already has lots of Components. It is actually very mature (i.e., old!), but it fits what I am looking for, so why not give it a shot.

For loosely coupled execution I used Apache Kafka. Kafka is a publish-subscribe message queue, and there are lots of those out there, of course (like ActiveMQ or RabbitMQ). After just a few minutes I discovered that Kafka is awesome. There is a lot to like about it, especially its cluster design and partitioned topics. But what I really liked was the Kafka QuickStart: just a simple text file with a few cut-n-paste steps in a Unix command shell. Always a good sign.
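
Partitioned topics are what let Kafka spread one topic across a cluster while still keeping related messages in order. This simplified sketch assigns each keyed record to a partition by hashing its key. Kafka's default partitioner hashes the serialized key bytes with murmur2, so `String.hashCode` here is only a stand-in, and `PartitionedTopic` is a hypothetical class.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of a partitioned topic (String.hashCode stands in for Kafka's murmur2).
final class PartitionedTopic {
    private final List<List<String>> partitions = new ArrayList<>();

    PartitionedTopic(int partitionCount) {
        for (int i = 0; i < partitionCount; i++) {
            partitions.add(new ArrayList<>());
        }
    }

    // The same key always maps to the same partition; floorMod keeps the index non-negative.
    int partitionFor(String key) {
        return Math.floorMod(key.hashCode(), partitions.size());
    }

    // Appends the value to its key's partition and returns the partition index.
    int send(String key, String value) {
        int p = partitionFor(key);
        partitions.get(p).add(value);
        return p;
    }

    List<String> partition(int index) {
        return partitions.get(index);
    }
}
```

Keying audit events by document ID, for example, would keep all events for one document in order within a single partition, while different documents spread across the cluster.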

For business logic used in workflow components I used Java on Apache Karaf. This is just my own implementation preference. There is nothing about Apache Camel or Apache Kafka that requires Apache Karaf. I just like Apache Karaf as a way to build Service logic.

Prototype

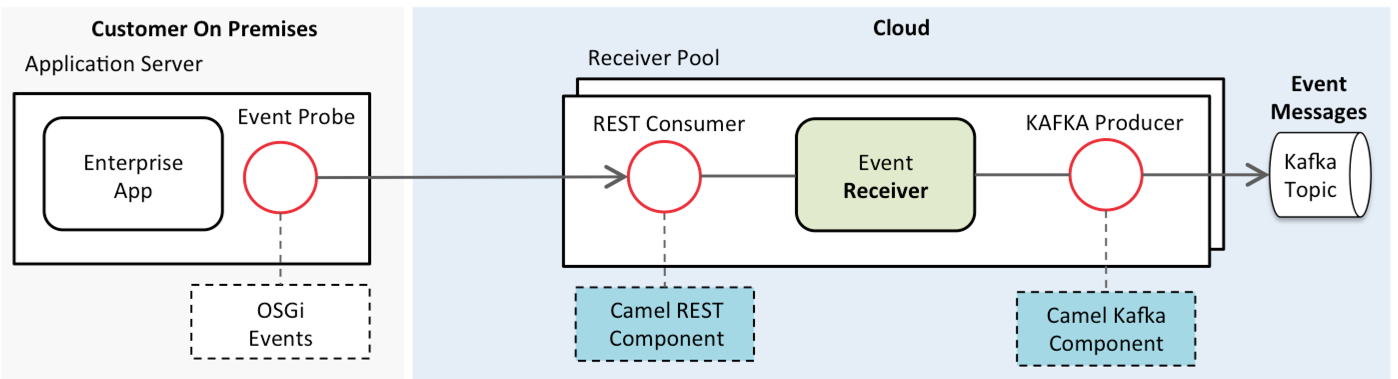

I already had an on-premises server application that gives mobile device users access to documents in a Content Repository. So I started by building a simple probe that detects auditing events generated by the server application (in this case, OSGi Events) and broadcasts these events to an Event Receiver in the Cloud.
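
The probe's code isn't shown here. A minimal JDK-only sketch might serialize each audit event to JSON and build an HTTP POST for the Event Receiver. In an OSGi container such a probe would typically be registered as an `org.osgi.service.event.EventHandler`; that wiring is left out so the sketch runs without OSGi on the classpath. `AuditProbe`, the property names, the topic string and the receiver URL are all assumptions.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical probe: serializes an audit event to JSON and prepares the POST to the Event Receiver.
final class AuditProbe {
    private final URI receiver;

    AuditProbe(URI receiver) {
        this.receiver = receiver;
    }

    // Writes the event topic plus its (flat, string-valued) properties as one JSON object.
    static String toJson(String topic, Map<String, String> properties) {
        Map<String, String> fields = new LinkedHashMap<>();
        fields.put("topic", topic);
        fields.putAll(properties);
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, String> field : fields.entrySet()) {
            if (!first) {
                sb.append(',');
            }
            first = false;
            sb.append(quote(field.getKey())).append(':').append(quote(field.getValue()));
        }
        return sb.append('}').toString();
    }

    // Minimal JSON string escaping: quotes, backslashes and control characters.
    private static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            if (c == '"') {
                sb.append("\\\"");
            } else if (c == '\\') {
                sb.append("\\\\");
            } else if (c < 0x20) {
                sb.append(String.format("\\u%04x", (int) c));
            } else {
                sb.append(c);
            }
        }
        return sb.append('"').toString();
    }

    // Builds, but does not send, the HTTP request that forwards one event.
    HttpRequest forward(String topic, Map<String, String> properties) {
        return HttpRequest.newBuilder(receiver)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(toJson(topic, properties)))
                .build();
    }
}
```

Sending would then be a call to `java.net.http.HttpClient.send(...)` with the returned request; keeping the probe this thin means the on-premises server does no workflow logic of its own.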

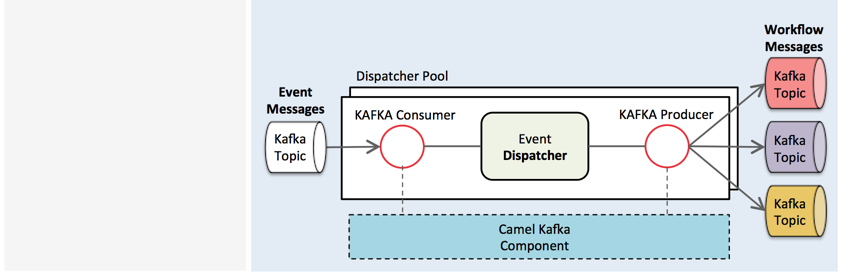

The Event Receiver uses the Apache Camel REST Component to consume messages in JSON format and the Apache Camel Kafka Component to produce those messages onto a general Kafka Topic. The Event Receiver does not do any actual work; its job is merely to ingest audit events, so it can be scaled independently to handle incoming load.
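
A JDK-only stand-in for the Event Receiver, assuming `com.sun.net.httpserver.HttpServer` in place of the Camel REST Component and a `BlockingQueue` in place of the general Kafka Topic. The `/events` path and the `202 Accepted` response are hypothetical choices. What it illustrates is that the receiver only ingests and hands off, carrying no business logic.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Stand-in Event Receiver: accepts JSON over HTTP and appends it, unchanged,
// to an in-memory queue that plays the role of the general Kafka Topic.
final class EventReceiver {
    final BlockingQueue<String> generalTopic = new LinkedBlockingQueue<>();
    private final HttpServer server;

    EventReceiver(int port) {
        server = bind(port);
        server.createContext("/events", exchange -> {
            if (!"POST".equals(exchange.getRequestMethod())) {
                exchange.sendResponseHeaders(405, -1); // only POST is accepted
                exchange.close();
                return;
            }
            try (InputStream body = exchange.getRequestBody()) {
                // No business logic: ingest the event and hand it off.
                generalTopic.add(new String(body.readAllBytes(), StandardCharsets.UTF_8));
            }
            exchange.sendResponseHeaders(202, -1); // accepted for asynchronous processing
            exchange.close();
        });
    }

    private static HttpServer bind(int port) {
        try {
            return HttpServer.create(new InetSocketAddress("127.0.0.1", port), 0);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Starts listening and returns the actual port (useful when port 0 was requested).
    int start() {
        server.start();
        return server.getAddress().getPort();
    }

    void stop() {
        server.stop(0);
    }
}
```

Because each instance holds nothing beyond the hand-off, several copies could run side by side behind a load balancer; in the prototype, the durable hand-off is Kafka's job rather than an in-memory queue's.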

An Event Dispatcher in the Cloud is responsible for triaging events and deciding which workflows need to know about each event. It uses the Apache Camel Kafka Component to consume events from the general Kafka Topic and then to produce them onto other Kafka Topics dedicated to particular mobile workflows. While doing this it can transform the messages to add data pertinent to that workflow, such as the user details needed for a push notification. Just like the Event Receiver, the Event Dispatcher can be scaled independently.
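
A sketch of the dispatcher's triage-and-enrich step, with in-memory lists standing in for the workflow-specific Kafka Topics. The `AuditEvent` fields, the topic names and the `register` method are hypothetical; in the prototype, consuming and producing are done with the Camel Kafka Component instead.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;
import java.util.function.UnaryOperator;

// Hypothetical shape of an audit event after it has been parsed from JSON.
record AuditEvent(String user, String action, String document, Map<String, String> extra) {}

final class EventDispatcher {
    // One route per workflow: the events it cares about and how to enrich them.
    private record Route(String topic, Predicate<AuditEvent> accepts, UnaryOperator<AuditEvent> enrich) {}

    private final List<Route> routes = new ArrayList<>();
    // In-memory stand-in for the workflow-specific Kafka Topics.
    final Map<String, List<AuditEvent>> topics = new HashMap<>();

    void register(String topic, Predicate<AuditEvent> accepts, UnaryOperator<AuditEvent> enrich) {
        routes.add(new Route(topic, accepts, enrich));
    }

    // Triage one event from the general topic: every workflow that accepts it
    // receives its own enriched version on its own topic.
    void dispatch(AuditEvent event) {
        for (Route route : routes) {
            if (route.accepts().test(event)) {
                topics.computeIfAbsent(route.topic(), t -> new ArrayList<>())
                      .add(route.enrich().apply(event));
            }
        }
    }
}
```

A push workflow might register a predicate for `VIEWED` and `UPDATED` actions and an enrichment function that looks up the subscriber's device token, so the downstream worker never has to query a user directory itself.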

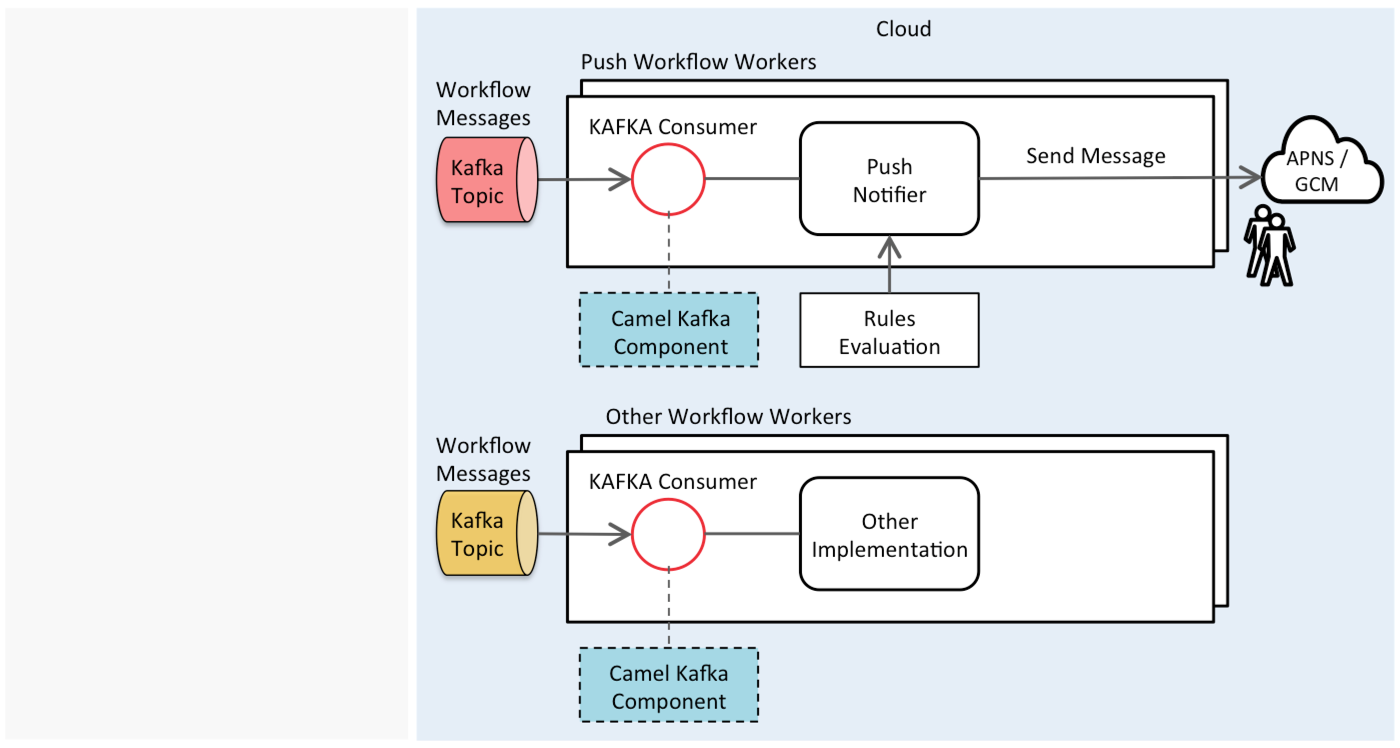

Finally, Workers in the Cloud are responsible for consuming batches of workflow messages from Kafka Topics and performing the actual workflow. One Worker implements a Push Notification workflow that sends APNS or GCM notifications based on rules created by end users, who specify the types of event for which they want to be notified. The business logic for sending Push Notifications is also built as a Camel Component that exposes endpoints, so it can be reused in any other workflow.
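
A sketch of the Push Notification Worker's rule check over one batch. `PushCandidate`, `NotificationRule` and `PushSender` are hypothetical types; a real `PushSender` would call APNS or GCM (in the prototype, through the Camel push component), and it is reduced to an interface here so the sketch runs stand-alone.

```java
import java.util.List;
import java.util.Locale;
import java.util.Set;

// Hypothetical message on the push-notification workflow topic, already enriched by the dispatcher.
record PushCandidate(String subscriber, String action, String document, String platform, String deviceToken) {}

// Hypothetical end-user rule: notify this subscriber for these event types.
record NotificationRule(String subscriber, Set<String> actions) {}

// Abstraction over the real APNS / GCM senders so the worker logic can run stand-alone.
interface PushSender {
    void send(String platform, String deviceToken, String text);
}

final class PushWorker {
    private final List<NotificationRule> rules;
    private final PushSender sender;

    PushWorker(List<NotificationRule> rules, PushSender sender) {
        this.rules = rules;
        this.sender = sender;
    }

    // Processes one batch consumed from the topic and returns how many notifications were sent.
    int processBatch(List<PushCandidate> batch) {
        int sent = 0;
        for (PushCandidate c : batch) {
            for (NotificationRule rule : rules) {
                boolean matches = rule.subscriber().equals(c.subscriber())
                        && rule.actions().contains(c.action());
                if (matches) {
                    String text = c.document() + " was " + c.action().toLowerCase(Locale.ROOT);
                    sender.send(c.platform(), c.deviceToken(), text);
                    sent++;
                }
            }
        }
        return sent;
    }
}
```

Keeping the sender behind an interface mirrors the post's point about reuse: the same sending logic could serve any other workflow that needs to notify a device.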

It worked!

I have an Android app that I can use to browse documents in a Content Repository:

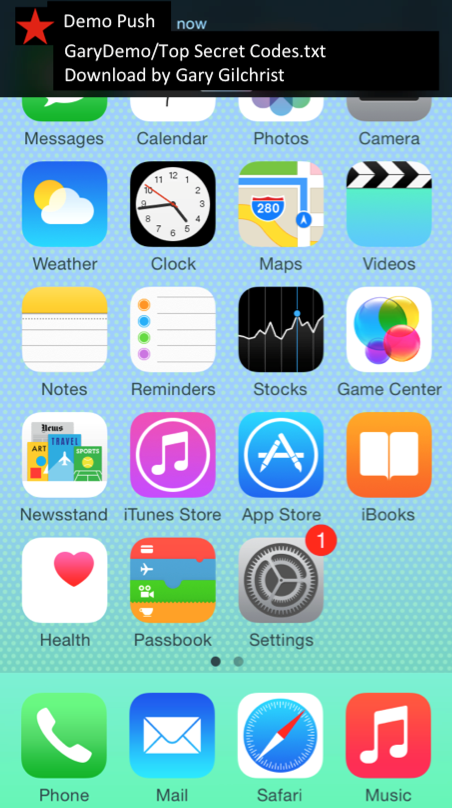

Now when I open this document on my Android device, I get a notification on my iPhone:

Conclusion

That’s a very convoluted way to send a push notification! Also, content repositories already have event-based mechanisms that you can use to integrate with workflows (like Observation in JCR). But this is just an example of one mobile workflow, and the same approach could be used to implement any other workflow. As a next step for the prototype, I think Microservices look like an interesting way to build workflow components.
